Image by Author

# Introduction

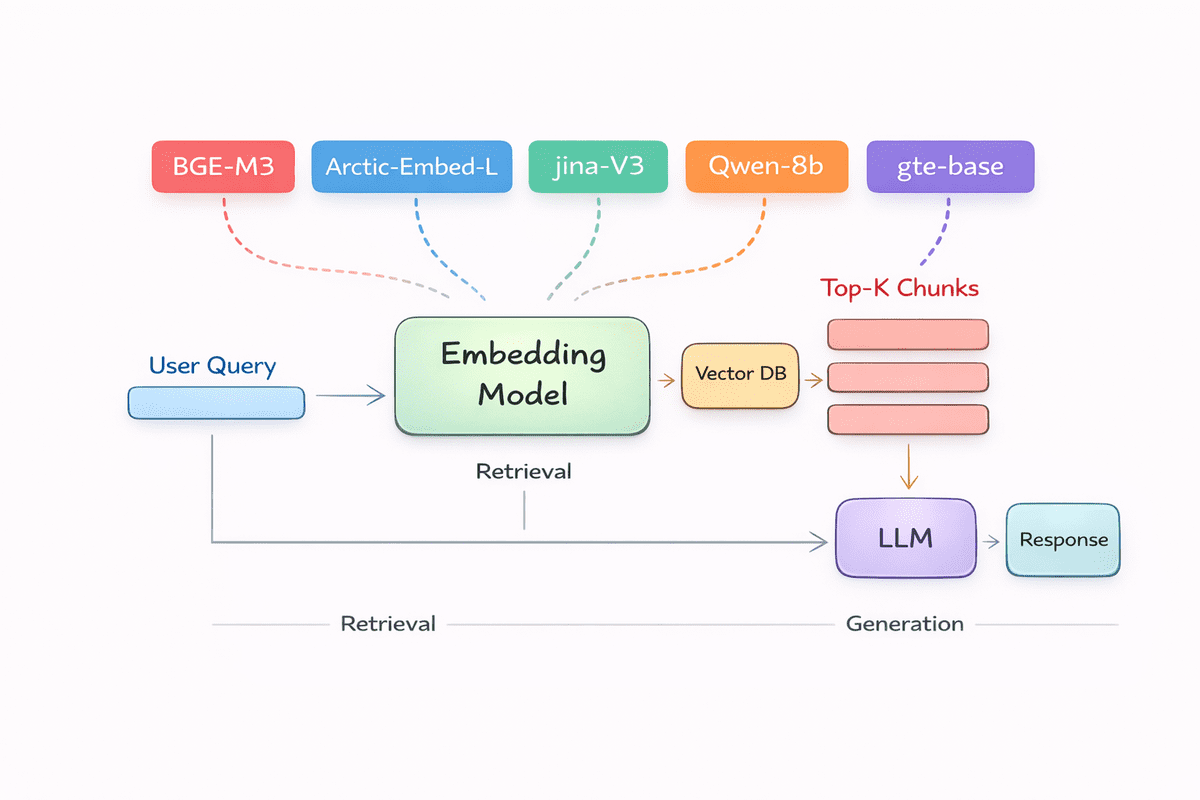

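In a retrieval-augmented generation (RAG) pipeline, embedding models are the foundation that makes retrieval work. Before a language model can answer a question, summarize a document, or reason over your data, the pipeline has to find the passages that are relevant to the request. Doing that requires a way to represent and compare meaning, and that is exactly what embeddings do: they map each piece of text to a vector so that texts with similar meaning end up close together. Retrieval then becomes a nearest-neighbor search over those vectors.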
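To make "comparing meaning" concrete, here is a minimal sketch of the retrieval step using cosine similarity. The three-dimensional vectors are hand-written toy values standing in for real model output (actual embeddings from the models below have hundreds or thousands of dimensions), and the document names are hypothetical.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve(query_vec: list[float], doc_vecs: dict[str, list[float]], k: int = 2):
    """Return (doc_id, score) pairs for the k documents most similar to the query."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Toy "embeddings" written by hand for illustration only; a real embedding
# model would produce these vectors from the document text.
docs = {
    "refund_policy": [0.9, 0.1, 0.0],
    "shipping_times": [0.1, 0.9, 0.1],
    "return_window": [0.8, 0.2, 0.1],
}
query = [0.85, 0.15, 0.05]  # stands in for e.g. "How do I get my money back?"

for doc_id, score in retrieve(query, docs):
    print(f"{doc_id}: {score:.3f}")
```

In a production RAG system the brute-force loop is usually replaced by a vector database or approximate nearest-neighbor index, but the similarity computation is the same idea.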

In this article, we explore the top embedding models for English and multilingual retrieval, ranked using a retrieval-focused evaluation index. All of them are widely adopted in real-world systems and consistently deliver accurate, reliable retrieval across a range of RAG use cases.

Evaluation criteria:

- 60 percent performance: English retrieval quality and multilingual retrieval performance

- 30 percent downloads: Hugging Face feature-extraction model downloads as a proxy for real-world adoption

- 10 percent practicality: Model size, embedding dimensionality, and deployment feasibility

The final ranking favors embedding models that retrieve accurately, are actively used by teams, and can be deployed without extreme infrastructure requirements.
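The weighting above can be sketched as a composite score. This is an illustrative reconstruction, not the exact procedure behind this ranking: it assumes each criterion is min-max normalized across candidates, that downloads are log-scaled first, and that practicality has already been reduced to a single 0 to 1 number. The model names and all input numbers are hypothetical placeholders.

```python
import math

# Weights from the evaluation criteria above.
WEIGHTS = {"performance": 0.6, "downloads": 0.3, "practicality": 0.1}

def min_max(values):
    """Rescale values to [0, 1]; if all values are equal, treat them as tied at 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]

def composite_scores(candidates):
    """Weighted sum of per-criterion normalized scores for each candidate model."""
    names = list(candidates)
    normalized = {}
    for criterion in WEIGHTS:
        raw = [candidates[name][criterion] for name in names]
        if criterion == "downloads":
            # Assumption: log-scale downloads so one very popular model does not swamp the rest.
            raw = [math.log10(v) for v in raw]
        normalized[criterion] = dict(zip(names, min_max(raw)))
    return {name: sum(WEIGHTS[c] * normalized[c][name] for c in WEIGHTS) for name in names}

# Hypothetical placeholder numbers, NOT the real scores behind this article's ranking.
candidates = {
    "model_a": {"performance": 70.0, "downloads": 1_000_000, "practicality": 0.5},
    "model_b": {"performance": 65.0, "downloads": 10_000_000, "practicality": 0.9},
    "model_c": {"performance": 60.0, "downloads": 100_000, "practicality": 1.0},
}
ranked = sorted(composite_scores(candidates).items(), key=lambda kv: kv[1], reverse=True)
for name, score in ranked:
    print(f"{name}: {score:.3f}")
```

Because performance carries 60 percent of the weight, a model that leads on retrieval quality can outrank a more downloaded one, which matches the intent described above.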

# 1. BAAI bge-m3

BGE-M3 is an embedding model built for retrieval-focused applications and RAG pipelines, with an emphasis on strong performance across English and multilingual tasks. It has been extensively evaluated on public benchmarks and is widely used in real-world systems, making it a reliable choice for teams that need accurate and consistent retrieval across different data types and domains.

Key features:

- Unified retrieval: Combines dense, sparse, and multi-vector retrieval capabilities in a single model.

- Multilingual support: Supports more than 100 languages with strong cross-lingual performance.

- Long-context handling: Processes long documents up to 8192 tokens.

- Hybrid search ready: Provides token-level lexical weights alongside dense embeddings for BM25-style hybrid retrieval.

- Production friendly: A moderate 1,024-dimensional embedding size and a single model that serves dense, sparse, and multi-vector retrieval make it practical to deploy at scale.
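BGE-M3's unified retrieval means one query can be scored three ways and the scores blended. The sketch below shows that idea with the standard library: a dense cosine score, a lexical score that sums weight products over shared tokens, and a ColBERT-style late-interaction (MaxSim) score. All vectors, token weights, and the blend weights `(0.4, 0.2, 0.4)` are illustrative assumptions rather than real model output; in practice, the FlagEmbedding library's `BGEM3FlagModel` is the usual way to obtain the three representations, so check its README for the exact `encode` options.

```python
def dense_score(q, d):
    """Dot product; assumes both vectors are already L2-normalized, so this equals cosine."""
    return sum(x * y for x, y in zip(q, d))

def lexical_score(q_weights, d_weights):
    """Sparse match: sum of weight products over tokens the query and document share."""
    return sum(w * d_weights[tok] for tok, w in q_weights.items() if tok in d_weights)

def multi_vector_score(q_tokens, d_tokens):
    """Late interaction (MaxSim): best-matching document token per query token, averaged."""
    return sum(max(dense_score(qv, dv) for dv in d_tokens) for qv in q_tokens) / len(q_tokens)

def hybrid_score(q, d, weights=(0.4, 0.2, 0.4)):
    """Weighted blend of the three scores; the weights here are illustrative, not tuned."""
    w_dense, w_lex, w_multi = weights
    return (w_dense * dense_score(q["dense"], d["dense"])
            + w_lex * lexical_score(q["lexical"], d["lexical"])
            + w_multi * multi_vector_score(q["multi"], d["multi"]))

# Hand-written toy representations standing in for BGE-M3 output.
query = {"dense": [0.6, 0.8], "lexical": {"refund": 0.3, "policy": 0.1},
         "multi": [[1.0, 0.0], [0.0, 1.0]]}
doc = {"dense": [0.8, 0.6], "lexical": {"refund": 0.25, "days": 0.05},
       "multi": [[0.6, 0.8], [1.0, 0.0]]}

print(f"hybrid score: {hybrid_score(query, doc):.3f}")
```

The lexical term rewards exact keyword overlap (useful for names, codes, and rare terms), while the dense and multi-vector terms capture paraphrases, which is why blending them tends to be more robust than any single mode.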

# 2. Qwen3 Embedding 8B

Qwen3-Embedding-8B is a high-end embedding model from the Qwen3 family, built specifically for text embedding and ranking workloads used in RAG and search systems. It is designed to perform strongly across retrieval-heavy tasks like document search, code search, clustering, and classification, and it has been evaluated extensively on public leaderboards where it ranks among the top models for multilingual retrieval quality.

Key features:

- Top-tier retrieval quality: Ranked No. 1 on the MTEB multilingual leaderboard as of June 5, 2025, with a score of 70.58

- Long-context support: Handles up to 32K tokens for long-text retrieval scenarios

- Flexible embedding size: Supports user-defined embedding dimensions from 32 to 4096

- Instruction-aware: Supports task-specific instructions that typically improve downstream performance

- Multilingual and code-ready: Supports more than 100 languages, including programming languages, with strong cross-lingual and code retrieval coverage
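Instruction awareness in practice means prepending a one-sentence task description to each query before embedding it, while documents are embedded as-is. The sketch below follows the prompt format and default task sentence shown on the Qwen3-Embedding model card at the time of writing; treat the exact string as an assumption and verify it against the current card. The example query and document are made up.

```python
DEFAULT_TASK = "Given a web search query, retrieve relevant passages that answer the query"

def get_detailed_instruct(task_description: str, query: str) -> str:
    """Prefix a query with a task instruction (format as shown on the model card)."""
    return f"Instruct: {task_description}\nQuery:{query}"

def build_inputs(queries, documents, task=DEFAULT_TASK):
    """Queries get the instruction prefix; documents are embedded without one."""
    return [get_detailed_instruct(task, q) for q in queries] + list(documents)

inputs = build_inputs(
    ["What is retrieval-augmented generation?"],
    ["RAG pairs a retriever with a language model so answers can draw on external documents."],
)
for text in inputs:
    print(repr(text))
```

Keeping instructions on the query side only means the document index does not need to be re-embedded when you change the task description.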

# 3. Snowflake Arctic Embed L v2.0

Snowflake Arctic-Embed-L-v2.0 is a multilingual embedding model designed for high-quality retrieval at enterprise scale. It is optimized to deliver strong multilingual and English retrieval performance without requiring separate models, while maintaining efficient inference characteristics suitable for production systems. Released under the permissive Apache 2.0 license, Arctic-Embed-L-v2.0 is built for teams that need reliable, scalable retrieval across global datasets.

Key features:

- Multilingual without compromise: Delivers strong English and non-English retrieval, outperforming many open-source and proprietary models on benchmarks like MTEB, MIRACL, and CLEF

- Inference-efficient: Keeps a compact non-embedding parameter footprint for fast, cost-effective inference

- Compression-friendly: Supports Matryoshka Representation Learning and quantization to reduce embeddings to as little as 128 bytes with minimal quality loss

- Drop-in compatible: Built on bge-m3-retromae, allowing direct replacement in existing embedding pipelines

- Long-context support: Handles inputs up to 8192 tokens using RoPE-based context extension
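To see where a figure like 128 bytes can come from, consider scalar quantization. The sketch below uses symmetric int8 quantization (one signed byte per dimension plus one float scale per vector), so a 128-dimensional vector costs 128 bytes instead of 512 as float32. This is a generic technique, not necessarily Arctic's own recipe; 256 dimensions at 4 bits each would also come to 128 bytes, so check the model card for the configuration it recommends. The input vector is a hypothetical stand-in for a truncated embedding.

```python
from array import array

def quantize_int8(vec):
    """Symmetric per-vector int8 quantization: one signed byte per dimension plus a float scale."""
    scale = max(abs(x) for x in vec) / 127 or 1.0  # fall back to 1.0 for an all-zero vector
    codes = array("b", (round(x / scale) for x in vec)).tobytes()
    return codes, scale

def dequantize_int8(codes, scale):
    """Approximate reconstruction of the original float vector."""
    return [c * scale for c in array("b", codes)]

# Hypothetical 128-dimensional vector standing in for a Matryoshka-truncated embedding.
example = [((i * 37) % 101 - 50) / 50 for i in range(128)]
codes, scale = quantize_int8(example)
restored = dequantize_int8(codes, scale)

print(len(codes), "bytes per vector vs", len(example) * 4, "bytes as float32")
print("max reconstruction error:", max(abs(a - b) for a, b in zip(example, restored)))
```

Each value is rounded to the nearest multiple of `scale`, so the reconstruction error per dimension is at most half a quantization step, which is why similarity rankings usually change very little.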

# 4. Jina Embeddings V3

jina-embeddings-v3 is one of the most downloaded embedding models for text feature extraction on Hugging Face, making it a popular choice for real-world retrieval and RAG systems. It is a multilingual, multi-task embedding model designed to support a wide range of NLP use cases, with a strong focus on flexibility and efficiency. Built on a Jina XLM-RoBERTa backbone and extended with task-specific LoRA adapters, it enables developers to generate embeddings optimized for different retrieval and semantic tasks using a single model.

Key features:

- Task-aware embeddings: Uses multiple LoRA adapters to generate task-specific embeddings for retrieval, clustering, classification, and text matching

- Multilingual coverage: Supports over 100 languages, with focused tuning on 30 high-impact languages including English, Arabic, Chinese, and Urdu

- Long-context support: Handles input sequences up to 8192 tokens using Rotary Position Embeddings

- Flexible embedding sizes: Supports Matryoshka embeddings with truncation from 32 up to 1024 dimensions

- Production friendly: Widely adopted, easy to integrate with Transformers and SentenceTransformers, and supports efficient GPU inference
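Matryoshka embeddings put the most important information in the leading dimensions, so you can keep only the first N components (for jina-embeddings-v3, anywhere from 32 to 1,024) and trade a little accuracy for smaller indexes. The minimal sketch below truncates and then L2-normalizes again so that cosine or dot-product comparisons stay consistent. It only makes sense for models trained with a Matryoshka objective; the toy vector is hypothetical. Sentence Transformers also offers a `truncate_dim` option for the same purpose.

```python
import math

def truncate_embedding(vec, dims):
    """Keep the first `dims` components of a Matryoshka embedding and re-normalize them."""
    if not 0 < dims <= len(vec):
        raise ValueError(f"dims must be between 1 and {len(vec)}, got {dims}")
    head = vec[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head] if norm > 0 else head

full = [3.0, 4.0, 12.0]  # toy stand-in for a full-size embedding
short = truncate_embedding(full, 2)
print(short)
```

Truncate queries and documents to the same size: vectors of different lengths cannot be compared.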

# 5. GTE Multilingual Base

gte-multilingual-base is a compact yet high-performance embedding model from the GTE family, designed for multilingual retrieval and long-context text representation. It focuses on delivering strong retrieval accuracy while keeping hardware and inference requirements low, making it well suited for production RAG systems that need speed, scalability, and multilingual coverage without relying on large decoder-only models.

Key features:

- Strong multilingual retrieval: Achieves state-of-the-art results on multilingual and cross-lingual retrieval benchmarks for models of similar size

- Efficient architecture: Uses an encoder-only transformer design, which gives significantly faster inference and lower hardware requirements than decoder-only (LLM-based) embedding models

- Long-context support: Handles inputs up to 8192 tokens for long-document retrieval

- Elastic embeddings: Supports flexible output dimensions to reduce storage costs while preserving downstream performance

- Hybrid retrieval support: Generates both dense embeddings and sparse token weights for dense, sparse, or hybrid search pipelines
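When a model gives you both dense and sparse results, you still have to merge the two ranked lists. One common, model-agnostic way to do that is Reciprocal Rank Fusion (RRF), sketched below. Note the substitution: RRF is a general fusion method, not something gte-multilingual-base does itself. `k=60` is a conventional default, and the document IDs are hypothetical.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists: each document scores sum(1 / (k + rank)) over the lists it appears in."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)

dense_results = ["d1", "d2", "d3"]   # hypothetical top-3 from dense search
sparse_results = ["d3", "d1", "d4"]  # hypothetical top-3 from sparse search

print(reciprocal_rank_fusion([dense_results, sparse_results]))
```

RRF only uses ranks, so it does not require dense cosine scores and sparse lexical scores to be on comparable scales; documents that appear near the top of both lists rise to the top.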

# Detailed Embedding Model Comparison

The table below provides a detailed comparison of leading embedding models for RAG pipelines, focusing on context handling, embedding flexibility, retrieval capabilities, and what each model does best in practice.

| Model | Max Context Length | Embedding Output | Retrieval Capabilities | Key Strengths |
|---|---|---|---|---|
| BGE-M3 | 8,192 tokens | 1,024 dims | Dense, sparse, and multi-vector retrieval | Unified hybrid retrieval in a single model |
| Qwen3-Embedding-8B | 32K tokens | 32 to 4,096 dims (configurable) | Dense embeddings with instruction-aware retrieval | Top-tier retrieval accuracy on long and complex queries |
| Arctic-Embed-L-v2.0 | 8,192 tokens | 1,024 dims (MRL compressible) | Dense retrieval | High-quality retrieval with strong compression support |
| jina-embeddings-v3 | 8,192 tokens | 32 to 1,024 dims (Matryoshka) | Task-specific dense retrieval via LoRA adapters | Flexible multi-task embeddings with minimal overhead |
| gte-multilingual-base | 8,192 tokens | 128 to 768 dims (elastic) | Dense and sparse retrieval | Fast, efficient retrieval with low hardware requirements |
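The embedding sizes in the table translate directly into storage cost, one input to the practicality criterion. The sketch below estimates raw vector storage at float32 (4 bytes per dimension) for a hypothetical corpus of 10 million chunks, using each model's full output size from the table. It deliberately ignores ANN index overhead, metadata, and the sparse or multi-vector outputs, so real deployments will need more.

```python
BYTES_PER_FLOAT32 = 4

def index_size_gb(num_vectors, dims, bytes_per_dim=BYTES_PER_FLOAT32):
    """Raw storage for dense vectors only, in gigabytes (10^9 bytes)."""
    return num_vectors * dims * bytes_per_dim / 1e9

# Full output sizes from the comparison table, before any Matryoshka truncation.
FULL_DIMS = {
    "BGE-M3": 1024,
    "Qwen3-Embedding-8B": 4096,
    "Arctic-Embed-L-v2.0": 1024,
    "jina-embeddings-v3": 1024,
    "gte-multilingual-base": 768,
}

NUM_CHUNKS = 10_000_000  # hypothetical corpus size
for name, dims in FULL_DIMS.items():
    print(f"{name}: {index_size_gb(NUM_CHUNKS, dims):.1f} GB of float32 vectors")
```

The 4x gap between a 4,096-dimensional and a 1,024-dimensional model is one reason configurable or Matryoshka output sizes matter at scale.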

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in technology management and a bachelor’s degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.