TL;DR

If you want alignment fast, standardize on this hierarchy and ownership split:

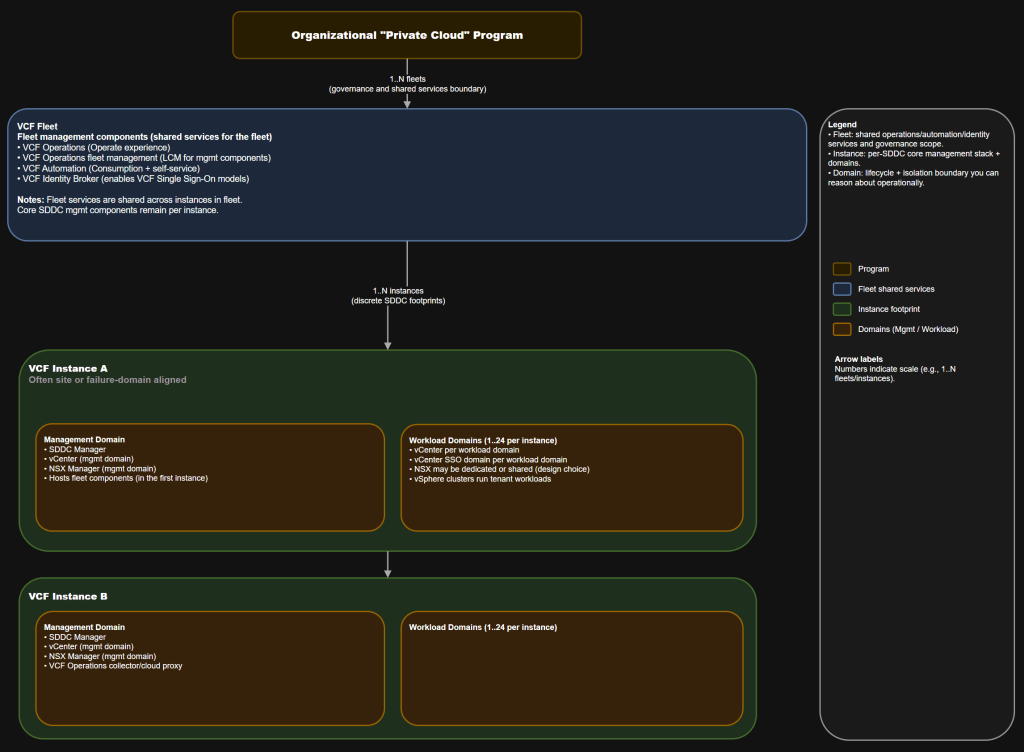

- Organizational private cloud (program term) -> VCF Fleet -> VCF Instance -> VCF Domains (management and workload) -> vSphere clusters

- A fleet is the boundary for shared fleet services (operations, automation, identity, governance). It is not “one shared SDDC management plane.”

- An instance is the boundary for a discrete SDDC footprint with its own management domain and workload domains.

- A domain is your lifecycle and isolation unit. It is where you place blast radius and change windows on purpose.

- Scope and code levels referenced in this post (VCF 9.0 GA BOM):

  - VCF 9.0 Release: Build 24755599

  - VCF Installer: 9.0.1.0 Build 24962180 (required to deploy VCF 9.0.0.0 components)

  - SDDC Manager: 9.0.0.0 Build 24703748

  - vCenter: 9.0.0.0 Build 24755230

  - ESX: 9.0.0.0 Build 24755229

  - NSX: 9.0.0.0 Build 24733065

  - VCF Operations: 9.0.0.0 Build 24695812

  - VCF Operations fleet management: 9.0.0.0 Build 24695816

  - VCF Automation: 9.0.0.0 Build 24701403

  - VCF Identity Broker: 9.0.0.0 Build 24695128

Architecture Diagram

Table of Contents

- Scenario

- Series map

- Assumptions

- Core vocabulary your org should standardize

- The hierarchy that prevents org-wide confusion

- What the fleet actually owns vs what stays per instance

- Day-0, day-1, day-2 map

- Challenge: one private cloud vs multiple fleets

- Deployment posture patterns

- Who owns what

- Failure domain analysis

- Anti-patterns

- Summary and Takeaways

- Conclusion

Scenario

You need architects, operations, and leadership to answer the same questions the same way:

- “What is VCF actually managing?”

- “What breaks if something fails?”

- “Who is on point when something needs to change?”

- “How do we scale without turning upgrades into drama?”

If you do not standardize vocabulary, you end up with:

- Fleet and instance used as synonyms.

- Change windows scheduled at the wrong layer.

- Mis-scoped identity decisions that are expensive to unwind.

- Incorrect assumptions about blast radius.

Series map

This topic is too big for one post, so here is the split:

- Part 1 (this post): Vocabulary, hierarchy, and ownership boundaries that stop misunderstandings.

- Part 2: The new management layer in VCF 9.0: VCF Installer, VCF Operations fleet management, and where lifecycle actually runs.

- Part 3: Topology patterns and isolation: single site, two sites in one region, multi-region, and the identity options that go with them.

- Later: Brownfield converge/import from a large vSphere estate.

Assumptions

- You are greenfield for VCF 9.0 GA.

- You plan to deploy both VCF Operations and VCF Automation from day-1, not phased.

- You want patterns for single site, two sites in one region, and multi-region.

- You may need one of two identity models:

  - A shared SSO boundary (single enterprise), or

  - Separate SSO boundaries (regulated isolation or hard multi-tenancy).

Core vocabulary your org should standardize

Use these terms consistently. Treat anything else as “slang” that must map back here.

| Term | What it means in your operating model | What it is not |
|---|---|---|
| Organizational private cloud | Your program label for “the service you provide” (budget, roadmap, stakeholders) | Not a VCF object you create in the UI |
| VCF fleet | Governance and shared-services boundary for fleet management components | Not “one shared vCenter” |
| Fleet management components | Shared services for operations, lifecycle, automation, identity | Not the same thing as SDDC Manager |
| VCF instance | A discrete VCF deployment footprint (management domain + workload domains) | Not a tenant boundary by default |
| VCF domain | Lifecycle and isolation boundary (management domain or workload domain) | Not always a “site” |
| Management domain | A domain that hosts the instance’s core management components (and fleet components for the first instance) | Not “never runs workloads” (you should still avoid putting random workloads here) |
| Workload domain | A domain built to run consumer workloads | Not a catch-all dumping ground for everything |
| vSphere cluster | The scale unit inside a domain | Not the lifecycle boundary for VCF components |

Recommended internal phrasing

This is the phrasing that reduces ambiguity in meetings:

- Fleet = shared fleet services and governance scope

- Instance = discrete SDDC footprint with its own core management stack

- Domain = lifecycle and workload isolation boundary

- Cluster = capacity and scaling unit

The hierarchy that prevents org-wide confusion

You are building a private cloud, but you manage fleets, instances, and domains

Leadership will say “private cloud.” That is fine.

Your platform team must translate that into platform objects: fleets, instances, domains, and clusters.

If you do not do this translation explicitly, “one private cloud” will be interpreted as “one vCenter,” which is the wrong mental model in VCF 9.0.

The hierarchy you should teach

- Fleet is the umbrella for shared services and governance.

- Instances are discrete deployments under that umbrella.

- Domains are where lifecycle and isolation live.

- Clusters are where you scale capacity.

This is the hierarchy you use in architecture reviews, operational runbooks, incident triage, and change calendars.
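To make the hierarchy concrete for runbooks or inventory tooling, it can be sketched as a small data model. This is only an illustration: the class names, the `kind` field, and the sample inventory names (`fleet-01`, `instance-a`, and so on) are assumptions made for this example, not VCF API objects.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Cluster:
    name: str  # capacity and scaling unit


@dataclass
class Domain:
    name: str
    kind: str  # "management" or "workload" (hypothetical labels)
    clusters: List[Cluster] = field(default_factory=list)


@dataclass
class Instance:
    name: str
    domains: List[Domain] = field(default_factory=list)

    def management_domains(self) -> List[Domain]:
        """Return the domains flagged as management domains."""
        return [d for d in self.domains if d.kind == "management"]


@dataclass
class Fleet:
    name: str
    instances: List[Instance] = field(default_factory=list)

    def paths(self) -> List[str]:
        """Return one 'fleet/instance/domain/cluster' path per cluster."""
        return [
            f"{self.name}/{i.name}/{d.name}/{c.name}"
            for i in self.instances
            for d in i.domains
            for c in d.clusters
        ]


# Hypothetical inventory: one fleet, one instance, two domains.
fleet = Fleet("fleet-01", [
    Instance("instance-a", [
        Domain("mgmt", "management", [Cluster("mgmt-cl01")]),
        Domain("wld-prod", "workload",
               [Cluster("prod-cl01"), Cluster("prod-cl02")]),
    ]),
])

for path in fleet.paths():
    print(path)
```

Writing every object as a full path is one way to keep incident tickets and change records unambiguous about which layer they refer to.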

What the fleet actually owns vs what stays per instance

This is the most important correction to get right.

Fleet scope

The fleet gives you shared services across multiple instances, typically including:

- VCF Operations as the day-to-day operate interface.

- VCF Operations fleet management for lifecycle of management components (download binaries, deploy, patch, upgrade, backup workflows).

- VCF Automation for self-service consumption and governance.

- VCF Identity Broker to enable VCF Single Sign-On models (optional designs, but still a fleet concern).

Operational implication:

- A fleet issue impacts visibility, governance, lifecycle workflows, and self-service.

- A fleet issue does not delete your vCenter or turn off your ESX hosts.

Instance scope

Each instance remains a discrete SDDC management footprint:

- SDDC Manager (instance lifecycle and orchestration)

- vCenter for the management domain

- NSX for the management domain

- Domain-level vCenter and NSX as designed

Operational implication:

- If an instance’s SDDC Manager or management domain is down, you lose instance-level lifecycle and potentially some management operations.

- Workloads may continue running, but your ability to change and recover cleanly is reduced.

Domain scope

Domains are where you place:

- Lifecycle boundaries

- Change windows

- Isolation boundaries

- Blast radius boundaries

In VCF 9.0, a workload domain commonly has:

- Its own vCenter

- Its own vCenter SSO domain

- NSX that may be dedicated to the domain or shared (design choice)

Operational implication:

- Domain-level incidents are the “tenant impact” layer.

- Domain-level upgrades can be scheduled per domain, not “everything at once.”
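The fleet, instance, and domain scopes above can be turned into a simple lookup that groups planned changes by the layer whose change window they belong to. This is a minimal sketch: the component labels and the `plan_change_windows` helper are assumptions made for illustration, and a shared NSX deployment would need its own entry depending on your design.

```python
from collections import defaultdict
from typing import Dict, Iterable, List

# Hypothetical mapping of components to the layer that owns their change window.
# NSX for workload domains is omitted because it may be dedicated or shared.
COMPONENT_LAYER: Dict[str, str] = {
    "VCF Operations": "fleet",
    "VCF Operations fleet management": "fleet",
    "VCF Automation": "fleet",
    "VCF Identity Broker": "fleet",
    "SDDC Manager": "instance",
    "management domain vCenter": "instance",
    "management domain NSX": "instance",
    "workload domain vCenter": "domain",
}


def plan_change_windows(components: Iterable[str]) -> Dict[str, List[str]]:
    """Group components by the layer where their change should be scheduled."""
    windows: Dict[str, List[str]] = defaultdict(list)
    for component in components:
        layer = COMPONENT_LAYER.get(component)
        if layer is None:
            raise ValueError(f"no layer mapping for {component!r}")
        windows[layer].append(component)
    return dict(windows)


plan = plan_change_windows([
    "VCF Automation",
    "SDDC Manager",
    "workload domain vCenter",
    "VCF Operations",
])
print(plan)
```

Rejecting unmapped components is deliberate in this sketch: a change that cannot be assigned to a layer is a sign the vocabulary has not been agreed yet.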

Day-0, day-1, day-2 map

This is the “where do we do the thing” map you want in every runbook.

Day-0 design decisions

These choices are difficult or expensive to reverse later:

- Fleet count and scope (how many governance boundaries you want)

- Instance alignment strategy (site, region, failure domain, or org boundary)

- Domain strategy (how many workload domains, what is isolated)

- Identity model choice (shared vs separate SSO boundaries)

- Network segmentation for management components and workload domains

- Backup and restore architecture for fleet management components

Day-0 outcome you want:

- You can answer “what is the blast radius if X fails?” without hand-waving.

Day-1 bring-up

Day-1 is about standing up the first production-quality slice:

- Deploy the first fleet. This also deploys the first instance and its initial management domain, which hosts the fleet components.

- Deploy additional instances (if needed)

- Create initial workload domain(s)

- Deploy and integrate VCF Operations and VCF Automation (your chosen posture is “both from day-1”)

Day-1 outcome you want:

- A working operating model where platform team, VI admin, and app/platform teams can each do their jobs without stepping on each other.

Day-2 operations

Day-2 is where most pain lives if the model is wrong:

- Lifecycle operations (patch, upgrade, drift management)

- Capacity management and domain scaling

- Identity lifecycle (cert rotation, provider changes, recovery)

- Adding instances to a fleet

- Adding workload domains and mapping them into your cloud org structure

- Incident response based on correct blast radius

Day-2 outcome you want:

- Changes are scoped to the right layer, and rollback paths are clear.

Challenge: one private cloud vs multiple fleets

The challenge

You want “one private cloud” from a service catalog perspective, but you also need:

- Scale

- Isolation

- Independent change windows

- Regulatory separation (sometimes)

In VCF terms, that means deciding how many fleets you run.

Solutions

Solution A: One private cloud program, one fleet

Best when:

- You have one enterprise governance boundary.

- You want the simplest operating model.

- You can tolerate a single fleet services layer as a shared dependency.

Tradeoffs:

- Fleet services outage affects the entire program’s visibility and provisioning workflows.

- Change windows for fleet services are shared.

Solution B: One private cloud program, multiple fleets

Best when:

- You have regulated tenants or business units requiring separation.

- You want different change windows per fleet.

- You want to reduce fleet-level blast radius.

Tradeoffs:

- You run multiple copies of fleet services (more overhead).

- You must standardize your cross-fleet operating model (naming, RBAC, monitoring, backup).

Solution C: Multiple private cloud programs, multiple fleets

Best when:

- You have hard organizational separation (budget, risk, compliance, separate leadership).

- You expect different service tiers and roadmaps.

Tradeoffs:

- Highest overhead.

- Harder to create consistent enterprise-wide consumption patterns.

Architecture tradeoff matrix

| Decision | Isolation | Scale | Ops overhead | Upgrade coordination | Typical use case |
|---|---|---|---|---|---|
| One fleet | Medium | High | Lowest | Highest coupling at fleet layer | Single enterprise platform |
| Multiple fleets | High | High | Medium to high | Independent per fleet | Regulated isolation, large enterprises |
| Multiple programs | Very high | Varies | Highest | Fully independent | Separate business entities |
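As a conversation starter for design reviews, the “best when” criteria for Solutions A, B, and C can be reduced to a few yes/no questions. The function below is a deliberately simplistic sketch: its name, its three inputs, and the order of the checks are assumptions made for this example, and a real decision would weigh overhead and scale as well.

```python
def suggest_fleet_model(
    separate_programs: bool,
    regulated_separation: bool,
    independent_change_windows: bool,
) -> str:
    """Return a starting-point recommendation, not a final design decision."""
    # Hard organizational separation (budget, risk, leadership) points to C.
    if separate_programs:
        return "C: multiple private cloud programs, multiple fleets"
    # Regulated tenants or per-fleet change windows point to B.
    if regulated_separation or independent_change_windows:
        return "B: one private cloud program, multiple fleets"
    # Otherwise the simplest operating model applies.
    return "A: one private cloud program, one fleet"


print(suggest_fleet_model(False, True, False))
```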

Deployment posture patterns

Single site

A practical default:

- One fleet

- One instance

- One management domain

- Multiple workload domains aligned to isolation needs (prod vs non-prod, regulated vs general, platform vs apps)

Operational posture:

- Simple identity.

- Simple change calendar.

- Domain boundaries do most of the work for isolation.

Two sites in one region

Your main design fork is: “Do I want a single operational footprint or two?”

Common patterns:

- One fleet, two instances (one per site)

  - Clear site-level failure domains.

  - Fleet services are still shared.

- One fleet, one instance with stretched constructs (only if your latency and storage architecture supports it)

  - Can simplify some operations.

  - Stretched designs raise day-2 complexity and recovery requirements.

Operational posture:

- Instance boundaries are often your “site boundary.”

- Domains remain your “tenant and lifecycle boundary.”

Multi-region

You are balancing:

- WAN dependency

- Fleet services blast radius

- Identity and compliance boundaries

Common patterns:

- One fleet, multiple instances across regions

  - Central governance, but higher WAN dependency.

- Multiple fleets (often one per region)

  - Lower regional coupling.

  - Better regulated isolation story.

Operational posture:

- Multi-fleet becomes attractive when you want regional autonomy and reduced shared dependency.

Who owns what

This is the “stop paging the wrong team” table.

| Scope | Platform team (cloud foundation) | VI admin (infrastructure) | App/platform teams (consumers) |
|---|---|---|---|
| Fleet services (VCF Operations, fleet management, Automation, Identity Broker) | Own and operate. Standards, backups, certs, lifecycle, RBAC | Support integration points for their instances | Consume. No admin of fleet services |
| Fleet topology (how many fleets, where) | Accountable decision | Consulted | Informed |
| Instance management stack (SDDC Manager, mgmt vCenter, mgmt NSX) | Guardrails and standards | Own and operate day-2 | Not responsible |
| Domain lifecycle (create, patch, expand, decommission) | Guardrails and patterns | Execute and operate | Consume outcomes |
| Workload operations inside guest OS and Kubernetes | Not responsible | Not responsible | Own and operate |

Design-time vs day-2 ownership

- Design-time: Platform team owns topology and identity model decisions.

- Day-2: VI admins own SDDC-level lifecycle execution. Platform team owns fleet services lifecycle. App/platform teams own workload lifecycle inside the platform.
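If you want the ownership split to drive alert routing rather than live only in a slide, it can be encoded as a lookup. The sketch below assumes hypothetical scope keys (`fleet_services`, `domain_lifecycle`, and so on) and a hypothetical `team_to_page` helper; the team assignments follow the ownership table in this section.

```python
from typing import Dict

# Primary day-2 owner per scope, following the "who owns what" table.
# The scope keys are illustrative labels, not VCF identifiers.
PRIMARY_OWNER: Dict[str, str] = {
    "fleet_services": "platform team",
    "fleet_topology": "platform team",
    "instance_management_stack": "VI admin",
    "domain_lifecycle": "VI admin",
    "workload_operations": "app/platform teams",
}


def team_to_page(scope: str) -> str:
    """Return the team that should be paged first for the given scope."""
    try:
        return PRIMARY_OWNER[scope]
    except KeyError:
        raise ValueError(f"unknown scope: {scope!r}") from None


print(team_to_page("instance_management_stack"))
```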

Failure domain analysis

Use this to set expectations with leadership.

Fleet services failure

What breaks:

- Centralized visibility and governance

- Automation workflows and self-service

- Fleet-level lifecycle workflows

What usually keeps running:

- Existing workloads in vSphere clusters

- Core vCenter and ESX operations inside an instance (depending on what is down)

Instance management domain failure

What breaks:

- Instance-level lifecycle orchestration

- Some management operations for domains in that instance

- Potentially NSX control plane for that instance if scoped there

What might keep running:

- Workloads already running (until something requires management intervention)

Workload domain failure

What breaks:

- Workloads and services in that domain

- Tenant-specific operations

What keeps running:

- Other domains in the instance

- Other instances in the fleet
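The three failure cases above can be expressed as a small function that answers “what is the blast radius if X fails?” for a given layer. This is a sketch under stated assumptions: the `TOPOLOGY` dictionary, its names, and the returned keys are hypothetical, and it encodes only the expectations listed in this section, not actual product behavior.

```python
from typing import Dict, List

# Hypothetical topology: fleet -> instance -> workload domains.
TOPOLOGY: Dict[str, Dict[str, List[str]]] = {
    "fleet-01": {
        "instance-a": ["wld-prod", "wld-nonprod"],
        "instance-b": ["wld-regulated"],
    }
}


def blast_radius(layer: str, name: str) -> Dict[str, List[str]]:
    """Summarize expected impact when a fleet, instance, or domain fails."""
    if layer == "fleet":
        instances = TOPOLOGY[name]  # KeyError if the fleet is unknown
        return {
            "loses": ["centralized visibility and governance",
                      "automation workflows and self-service",
                      "fleet-level lifecycle workflows"],
            "management_impaired": sorted(instances),
            "workloads_down": [],  # existing workloads usually keep running
        }
    if layer == "instance":
        for instances in TOPOLOGY.values():
            if name in instances:
                return {
                    "loses": ["instance-level lifecycle orchestration",
                              "some management operations for its domains"],
                    "management_impaired": list(instances[name]),
                    "workloads_down": [],  # may keep running for a while
                }
    if layer == "domain":
        for instances in TOPOLOGY.values():
            for domains in instances.values():
                if name in domains:
                    return {
                        "loses": ["workloads and services in the domain",
                                  "tenant-specific operations"],
                        "management_impaired": [name],
                        "workloads_down": [name],
                    }
    raise KeyError(f"unknown {layer}: {name!r}")


print(blast_radius("instance", "instance-a"))
```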

Anti-patterns

These are the patterns that create long-term operational pain.

- Treating fleet and instance as synonyms.

- Planning change windows at “the vCenter level” instead of domain and fleet services levels.

- Putting regulated tenants into the same fleet without a governance and identity strategy.

- Running meaningful production workloads in the management domain because “there is spare capacity.”

- Out-of-band changes without an inventory and drift practice.
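Two of these anti-patterns are easy to check mechanically if you keep a topology inventory. The sketch below assumes a hypothetical inventory format (plain dictionaries with `kind`, `production_workloads`, `regulated`, and `identity_strategy` fields); none of these field names come from VCF itself.

```python
from typing import Any, Dict, List


def find_anti_patterns(fleet: Dict[str, Any]) -> List[str]:
    """Flag two anti-patterns from a hypothetical fleet inventory record."""
    findings: List[str] = []
    has_regulated = False
    for instance in fleet["instances"]:
        for domain in instance["domains"]:
            # Production workloads placed in a management domain.
            if (domain["kind"] == "management"
                    and domain.get("production_workloads", 0) > 0):
                findings.append(
                    f"{instance['name']}/{domain['name']}: "
                    "production workloads in the management domain"
                )
            if domain.get("regulated"):
                has_regulated = True
    # Regulated tenants in a fleet with no documented strategy.
    if has_regulated and not fleet.get("identity_strategy"):
        findings.append(
            f"{fleet['name']}: regulated tenants without a documented "
            "governance and identity strategy"
        )
    return findings


# Hypothetical inventory that triggers both findings.
inventory = {
    "name": "fleet-01",
    "identity_strategy": None,
    "instances": [{
        "name": "instance-a",
        "domains": [
            {"name": "mgmt", "kind": "management", "production_workloads": 3},
            {"name": "wld-regulated", "kind": "workload", "regulated": True},
        ],
    }],
}

for finding in find_anti_patterns(inventory):
    print(finding)
```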

Summary and Takeaways

If you want the org aligned, lock in these rules:

- Fleet is a shared services and governance boundary, not a shared vCenter boundary.

- Instance is your discrete SDDC footprint boundary.

- Domain is your lifecycle, isolation, and blast radius boundary.

- “Private cloud” is what you call the program. Fleets, instances, and domains are what you operate.

- If you standardize vocabulary now, you will reduce friction in design reviews, incident triage, and change governance.

Conclusion

VCF 9.0 becomes easier to operate when you stop thinking “one vCenter” and start thinking in fleet, instance, and domain boundaries.

When you do that, you get:

- Clear ownership and escalation paths

- Predictable blast radius

- Change windows that match the topology

- A platform posture that scales from single site to multi-region without rewriting your operating model