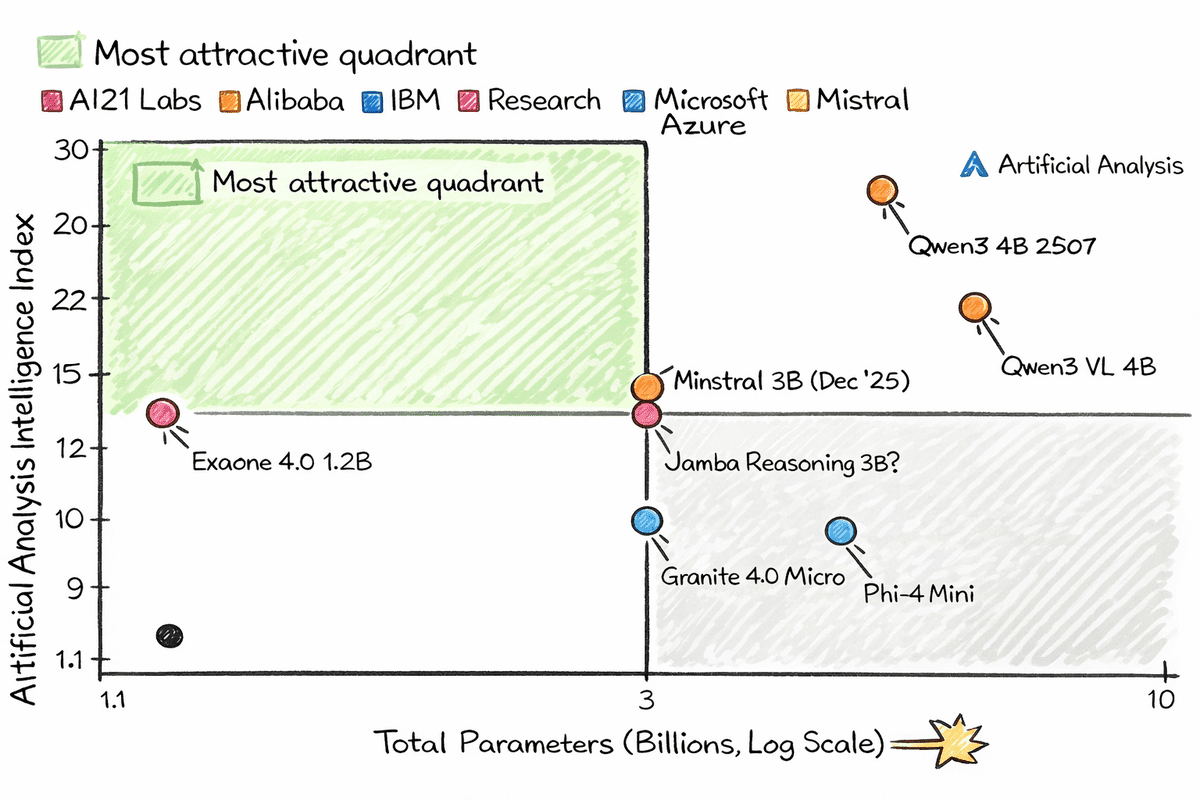

Image based on Artificial Analysis

# Introduction

We often talk about small AI models. But what about tiny models that can actually run on a Raspberry Pi with limited CPU power and very little RAM?

Thanks to modern architectures and aggressive quantization, models with roughly 1 to 4 billion parameters can now run on extremely small devices. Once quantized, they can run almost anywhere, even on your smart fridge. All you need is llama.cpp, a quantized GGUF model from the Hugging Face Hub, and a single command to get started.
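To see why quantization matters so much, it helps to do the arithmetic. Weight storage is roughly the parameter count times the bits per weight, divided by 8, in bytes. The sketch below applies that to the models in this list (1.2B to 4B parameters), using the parameter counts quoted in this article. The 4.5 bits-per-weight figure is an assumed average for a typical 4-bit GGUF quant, and real files vary by quantization scheme. The estimate also ignores the KV cache, activations, and runtime overhead, so treat the results as lower bounds.

```python
# Back-of-the-envelope weight size: params * bits_per_weight / 8 bytes.
# Assumptions: parameter counts are the ones quoted in this article, and
# ~4.5 bits/weight stands in for a typical 4-bit GGUF quant. KV cache,
# activations, and runtime overhead are NOT included.

def weight_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes)."""
    # (params_billions * 1e9 params) * bits / 8 = bytes; dividing by 1e9 gives GB.
    return params_billions * bits_per_weight / 8

MODELS = {
    "EXAONE 4.0 1.2B": 1.2,
    "Jamba Reasoning 3B": 3.0,
    "Granite 4.0 Micro": 3.0,
    "Ministral 3 3B (3.4B LM + 0.4B vision)": 3.8,
    "Phi-4-mini": 3.8,
    "Qwen3-4B": 4.0,
}

ASSUMED_4BIT_BPW = 4.5  # assumption: average bits/weight of a 4-bit quant

for name, params in MODELS.items():
    fp16 = weight_size_gb(params, 16)
    q4 = weight_size_gb(params, ASSUMED_4BIT_BPW)
    print(f"{name:40s} FP16 ~{fp16:4.1f} GB   4-bit ~{q4:4.2f} GB")
```

Under these assumptions, a 4B model drops from about 8 GB of weights at FP16 to roughly 2.25 GB at 4-bit, which is what makes boards with a few gigabytes of RAM a realistic target.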

What makes these tiny models exciting is that they are neither weak nor outdated. Many of them outperform much larger models from earlier generations in real-world text generation, and some also support tool calling, vision understanding, and structured outputs. These are not dumbed-down models. They are small, fast, and surprisingly capable, and they run on devices that fit in the palm of your hand.

In this article, we will explore 7 tiny AI models that run well on a Raspberry Pi and other low-power machines using llama.cpp. If you want to experiment with local AI without GPUs, cloud costs, or heavy infrastructure, this list is a great place to start.

# 1. Qwen3 4B 2507

Qwen3-4B-Instruct-2507 is a compact yet highly capable non-thinking language model that delivers a major leap in performance for its size. With just 4 billion parameters, it shows strong gains across instruction following, logical reasoning, mathematics, science, coding, and tool usage, while also expanding long-tail knowledge coverage across many languages.

The model demonstrates notably improved alignment with user preferences in subjective and open-ended tasks, resulting in clearer, more helpful, and higher-quality text generation. Its native 256K-token context window lets it handle very long documents and conversations, making it a practical choice for real-world applications that demand both depth and speed without the overhead of larger models.
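A native 256K-token window does not mean a Raspberry Pi can use all of it. Every cached token stores a key and a value vector in every attention layer, so memory grows linearly with context length. The sketch below estimates that KV cache. The configuration values (36 layers, 8 key/value heads, head dimension 128) are assumptions taken from Qwen3-4B's published config and should be checked against the model's `config.json`; the sketch also assumes an unquantized FP16 cache.

```python
# KV-cache size for a full-attention transformer using grouped-query attention:
#   bytes/token = 2 (K and V) * layers * kv_heads * head_dim * bytes_per_value
# Assumed Qwen3-4B values; verify against the model's config.json.
NUM_LAYERS = 36
NUM_KV_HEADS = 8
HEAD_DIM = 128
BYTES_PER_VALUE = 2  # FP16 cache entries (assumption: no KV-cache quantization)

def kv_cache_bytes(context_tokens: int) -> int:
    """Bytes needed to cache keys and values for `context_tokens` tokens."""
    per_token = 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * BYTES_PER_VALUE
    return per_token * context_tokens

for ctx in (4_096, 32_768, 262_144):
    print(f"{ctx:>7} tokens -> ~{kv_cache_bytes(ctx) / 2**30:.1f} GiB of KV cache")
```

Under these assumptions, the full 262,144-token window needs about 36 GiB for the cache alone, while 4,096 tokens needs roughly 0.6 GiB. On small boards you will therefore usually cap the context, for example with llama.cpp's `--ctx-size` option.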

# 2. Qwen3 VL 4B

Qwen3-VL-4B-Instruct brings Qwen3-VL, the most advanced vision-language series in the Qwen family to date, into a highly efficient 4B-parameter form factor. It delivers strong text understanding and generation combined with deeper visual perception, reasoning, and spatial awareness, enabling solid performance across images, video, and long documents.

The model supports a native 256K context (expandable to 1M), allowing it to process entire books or hours-long videos with accurate recall and fine-grained temporal indexing. Architectural upgrades such as Interleaved-MRoPE, DeepStack visual fusion, and precise text-timestamp alignment significantly improve long-horizon video reasoning, fine-detail recognition, and image-text grounding.

Beyond perception, Qwen3‑VL‑4B‑Instruct functions as a visual agent, capable of operating PC and mobile GUIs, invoking tools, generating visual code (HTML/CSS/JS, Draw.io), and handling complex multimodal workflows with reasoning grounded in both text and vision.

# 3. EXAONE 4.0 1.2B

EXAONE 4.0 1.2B is a compact, on-device-friendly language model designed to bring agentic AI and hybrid reasoning to extremely resource-efficient deployments. It combines a non-reasoning mode for fast, practical responses with an optional reasoning mode for complex problem solving, letting developers trade speed for depth dynamically within a single model.

Despite its small size, the 1.2B variant supports agentic tool use, enabling function calling and autonomous task execution, and offers multilingual capabilities in English, Korean, and Spanish, extending its usefulness beyond monolingual edge applications.
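In practice, tool calling is a loop: the model emits a structured request naming a function and its arguments, your code runs the function, and the result goes back to the model as a new message. The sketch below shows only the dispatch step. It assumes the OpenAI-style `tool_calls` message format returned by OpenAI-compatible servers (llama.cpp's `llama-server` exposes such an endpoint). The `get_weather` tool and the model reply are hypothetical, hard-coded stand-ins, and whether a particular model's output is parsed into this shape depends on its chat template and server settings.

```python
import json

# Hypothetical local tool the model is allowed to call.
def get_weather(city: str) -> dict:
    # Stub: a real tool would query a sensor or an API.
    return {"city": city, "temp_c": 21}

TOOLS = {"get_weather": get_weather}

# Mock assistant message in the OpenAI-style chat format (hard-coded, not real model output).
mock_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "id": "call_0",
            "type": "function",
            "function": {"name": "get_weather", "arguments": "{\"city\": \"Seoul\"}"},
        }
    ],
}

def run_tool_calls(message: dict) -> list:
    """Execute each requested tool and build 'tool' messages to send back to the model."""
    results = []
    for call in message.get("tool_calls") or []:
        fn = call["function"]
        tool = TOOLS.get(fn["name"])
        if tool is None:
            output = {"error": f"unknown tool {fn['name']}"}
        else:
            try:
                args = json.loads(fn["arguments"])  # arguments arrive as a JSON string
                output = tool(**args)
            except (json.JSONDecodeError, TypeError) as exc:
                # Small models sometimes emit malformed or mismatched arguments.
                output = {"error": str(exc)}
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(output),
        })
    return results

print(run_tool_calls(mock_message))
```

The returned messages would be appended to the conversation and sent back, so the model can use the tool results to write its final answer.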

Architecturally, it belongs to the EXAONE 4.0 family, which introduced advances such as hybrid attention and an improved normalization scheme, and it supports a 64K-token context window, which is unusually long for a model of this scale.

Optimized for efficiency, it is explicitly positioned for on‑device and low‑cost inference scenarios, where memory footprint and latency matter as much as model quality.

# 4. Ministral 3B

Ministral-3-3B-Instruct-2512 is the smallest member of the Ministral 3 family and a highly efficient tiny multimodal language model purpose-built for edge and low-resource deployment. It is the FP8, instruction-fine-tuned variant, optimized specifically for chat and instruction-following workloads while maintaining strong adherence to system prompts and structured outputs.

Architecturally, it combines a 3.4B‑parameter language model with a 0.4B vision encoder, enabling native image understanding alongside text reasoning.

Despite its compact size, the model supports a large 256K context window, robust multilingual coverage across dozens of languages, and native agentic capabilities such as function calling and JSON output, making it well suited for real‑time, embedded, and distributed AI systems.

Designed to fit within 8 GB of VRAM in FP8 (and even less when quantized), Ministral 3 3B Instruct delivers strong performance per watt and per dollar for production use cases that demand efficiency without sacrificing capability.

# 5. Jamba Reasoning 3B

Jamba-Reasoning-3B is a compact yet exceptionally capable 3‑billion‑parameter reasoning model designed to deliver strong intelligence, long‑context processing, and high efficiency in a small footprint.

Its defining innovation is a hybrid Transformer–Mamba architecture, where a small number of attention layers capture complex dependencies while the majority of layers use Mamba state‑space models for highly efficient sequence processing.

This design dramatically reduces memory overhead and improves throughput, enabling the model to run smoothly on laptops, GPUs, and even mobile‑class devices without sacrificing quality.

Despite its small size, Jamba Reasoning 3B supports 256K-token contexts and scales to very long documents without the massive attention caches that full-attention models need, which makes long-context inference practical and cost-effective.
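The memory advantage of the hybrid design comes from which layers keep a per-token cache. Attention layers store keys and values for every token, while Mamba layers carry a fixed-size recurrent state regardless of sequence length. The sketch below compares the two cases. All sizes are hypothetical round numbers (28 layers, 2 of them attention in the hybrid, a 4 MiB state per Mamba layer) chosen to illustrate the scaling; they are not Jamba Reasoning 3B's actual configuration.

```python
# Cache memory: all-attention stack vs. hybrid attention + Mamba stack.
# HYPOTHETICAL sizes for illustration only (not Jamba Reasoning 3B's real config).
TOTAL_LAYERS = 28
HYBRID_ATTENTION_LAYERS = 2          # the remaining layers are Mamba layers in the hybrid
KV_HEADS, HEAD_DIM, BYTES_PER_VALUE = 8, 128, 2
MAMBA_STATE_BYTES = 4 * 2**20        # assumed fixed-size state per Mamba layer (4 MiB)

def kv_bytes(attention_layers: int, tokens: int) -> int:
    """Keys + values for every cached token in every attention layer."""
    return 2 * attention_layers * KV_HEADS * HEAD_DIM * BYTES_PER_VALUE * tokens

def full_attention_cache(tokens: int) -> int:
    return kv_bytes(TOTAL_LAYERS, tokens)

def hybrid_cache(tokens: int) -> int:
    mamba_layers = TOTAL_LAYERS - HYBRID_ATTENTION_LAYERS
    # Mamba state does not depend on sequence length; only attention layers grow.
    return kv_bytes(HYBRID_ATTENTION_LAYERS, tokens) + mamba_layers * MAMBA_STATE_BYTES

for ctx in (4_096, 65_536, 262_144):
    full = full_attention_cache(ctx) / 2**30
    hybrid = hybrid_cache(ctx) / 2**30
    print(f"{ctx:>7} tokens: full attention ~{full:5.2f} GiB | hybrid ~{hybrid:5.2f} GiB")
```

With these made-up numbers, the all-attention stack needs 28 GiB of cache at 262,144 tokens, while the hybrid needs about 2.1 GiB. The exact figures do not matter; the point is that cache growth tracks the share of layers that use attention.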

On intelligence benchmarks, it outperforms comparable small models such as Gemma 3 4B and Llama 3.2 3B on a combined score spanning multiple evaluations, demonstrating unusually strong reasoning ability for its class.

# 6. Granite 4.0 Micro

Granite-4.0-micro is a 3B‑parameter long‑context instruct model developed by IBM’s Granite team and designed specifically for enterprise‑grade assistants and agentic workflows.

Fine‑tuned from Granite‑4.0‑Micro‑Base using a blend of permissively licensed open datasets and high‑quality synthetic data, it emphasizes reliable instruction following, professional tone, and safe responses, reinforced by a default system prompt added in its October 2025 update.

The model supports a very large 128K context window, strong tool‑calling and function‑execution capabilities, and broad multilingual support spanning major European, Middle Eastern, and East Asian languages.

Built on a dense decoder-only transformer architecture with modern components such as GQA, RoPE, SwiGLU MLPs, and RMSNorm, Granite-4.0-Micro balances robustness and efficiency. This makes it well suited as a foundation model for business applications, RAG pipelines, coding tasks, and LLM agents that must integrate cleanly with external systems, and it is released under the Apache 2.0 open-source license.
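For readers unfamiliar with the components named above, here is a minimal pure-Python sketch of three of them: RMSNorm, the SwiGLU activation, and the query-to-key/value head mapping used by grouped-query attention (GQA). These are generic textbook definitions operating on plain lists, not IBM's implementation; real models apply them to large tensors with learned weights, and RoPE is omitted for brevity.

```python
import math

def rms_norm(x: list, weight: list, eps: float = 1e-6) -> list:
    # Divide by the root-mean-square of the vector (no mean subtraction, unlike LayerNorm),
    # then apply a learned per-dimension scale.
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for v, w in zip(x, weight)]

def sigmoid(v: float) -> float:
    # Numerically stable logistic function.
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)

def silu(v: float) -> float:
    return v * sigmoid(v)

def swiglu(gate: list, up: list) -> list:
    # SwiGLU: SiLU(gate projection) * (up projection), elementwise. In a full MLP,
    # gate and up come from two linear layers and a third "down" layer follows.
    return [silu(g) * u for g, u in zip(gate, up)]

def gqa_kv_head(query_head: int, n_query_heads: int, n_kv_heads: int) -> int:
    # Grouped-query attention: consecutive query heads share one key/value head.
    if n_query_heads % n_kv_heads:
        raise ValueError("n_query_heads must be a multiple of n_kv_heads")
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size

print([gqa_kv_head(h, 8, 2) for h in range(8)])
```

Here eight query heads share two key/value heads (heads 0 to 3 use KV head 0, heads 4 to 7 use KV head 1), which is how GQA shrinks the KV cache compared with giving every query head its own keys and values.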

# 7. Phi-4 Mini

Phi-4-mini-instruct is a lightweight, open 3.8B‑parameter language model from Microsoft designed to deliver strong reasoning and instruction‑following performance under tight memory and compute constraints.

Built on a dense decoder‑only Transformer architecture, it is trained primarily on high‑quality synthetic “textbook‑like” data and carefully filtered public sources, with a deliberate emphasis on reasoning‑dense content over raw factual memorization.

The model supports a 128K token context window, enabling long‑document understanding and extended conversations uncommon at this scale.

Post‑training combines supervised fine‑tuning and direct preference optimization, resulting in precise instruction adherence, robust safety behavior, and effective function calling.
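Direct preference optimization trains on pairs of preferred and rejected responses without a separate reward model. The sketch below computes the standard per-pair DPO loss, `-log(sigmoid(beta * ((logp_w - ref_logp_w) - (logp_l - ref_logp_l))))`, from sequence log-probabilities under the policy and a frozen reference model. It illustrates the objective only: the article does not describe Microsoft's training setup, and `beta = 0.1` is an assumed illustrative value.

```python
import math

def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))

def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Per-pair DPO loss from summed sequence log-probabilities.

    beta=0.1 is an illustrative default, not a documented Phi-4 setting.
    """
    # How far the policy has moved (in log space) from the reference on each response.
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    z = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(z)) == softplus(-z)
    return softplus(-z)

# Policy identical to the reference: loss equals log(2).
print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
# Policy shifted toward the chosen answer: loss drops below log(2).
print(dpo_loss(-9.0, -13.0, -10.0, -12.0))
```

Minimizing this loss pushes the policy to raise the likelihood of preferred responses relative to the reference more than it raises the likelihood of rejected ones, while the reference term keeps it from drifting arbitrarily far.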

With a large 200K‑token vocabulary and broad multilingual coverage, Phi‑4‑mini‑instruct is positioned as a practical building block for research and production systems that must balance latency, cost, and reasoning quality, particularly in memory‑ or compute‑constrained environments.

# Final Thoughts

Tiny models have reached a point where size is no longer a hard limit on capability. The Qwen3 series stands out in this list, delivering performance that rivals much larger language models and even challenges some proprietary systems. If you are building applications for a Raspberry Pi or other low-power devices, Qwen3 is an excellent starting point and well worth integrating into your setup.

Beyond Qwen, EXAONE 4.0 1.2B is particularly strong at reasoning and non-trivial problem solving while remaining significantly smaller than most alternatives. Ministral 3 3B also deserves attention as the latest release in its series, offering an updated knowledge cutoff and solid general-purpose performance.

Overall, many of these models are impressive, but if your priorities are speed, accuracy, and tool calling, the Qwen3 LLM and VLM variants are hard to beat. They clearly show how far tiny, on-device AI has come and why local inference on small hardware is no longer a compromise.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in technology management and a bachelor’s degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.